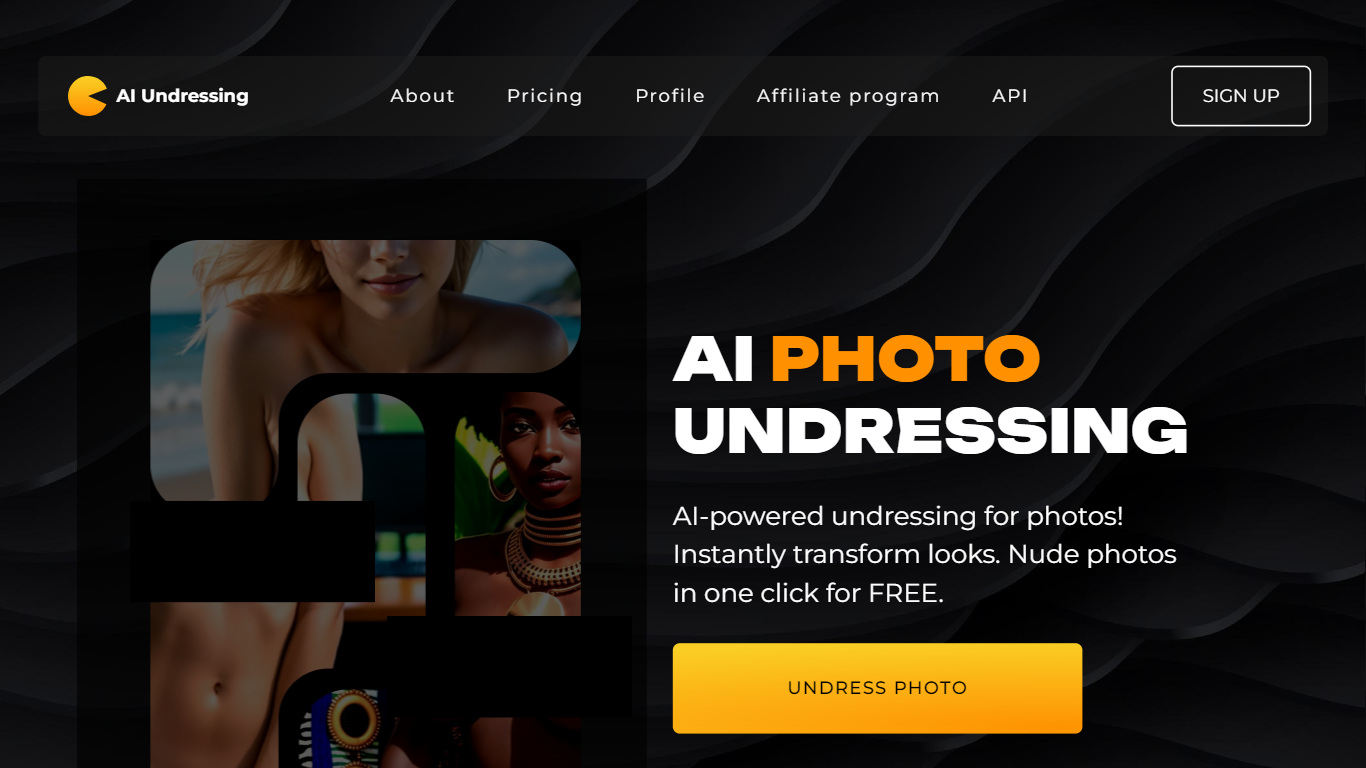

In today’s fast-moving digital world, artificial intelligence (AI) has made remarkable advances, transforming sectors such as entertainment, education, healthcare, and fashion. One of the most controversial yet widely discussed applications of AI is the so-called “undress AI remover.” This technology, which digitally removes clothing from images or videos to expose the body underneath, has raised numerous ethical concerns alongside its claimed applications. It has the potential for both positive and negative impacts, but the complexity of its ethical implications should not be underestimated. These technologies need to be examined closely to understand both the benefits and the risks they pose to society.

At its core, undress AI technology uses deep learning algorithms and computer vision to manipulate digital content, usually photos or videos of people. These systems analyze an image, detect the human body, and generate a guess at what the person might look like without clothing. The result is a synthetic image, built from the outline and contours of the body, that is meant to simulate the person undressed. While this technology may seem like futuristic magic to some, it also raises serious ethical and legal questions, particularly around privacy and consent.

One of the most troubling issues surrounding undress AI removers is consent. In many cases, the people depicted in these images or videos have not agreed to have their likeness altered in this way. This is a direct violation of their privacy rights and can lead to harmful consequences such as harassment, exploitation, and identity theft. The ability to create explicit images of people without their knowledge or consent is a serious invasion of personal space, and it raises concerns about how AI can be used to manipulate or coerce people.

Moreover, using AI to create or distribute images without consent can have significant psychological and social consequences. People who fall victim to these technologies may experience emotional distress, a loss of trust, and damage to their reputations. The creation of deepfakes and other manipulated images can also feed a broader culture of body-shaming, reinforcing unrealistic beauty standards and objectifying individuals. The psychological harm caused by this kind of exploitation should not be underestimated, especially in an era when social media and online presence are deeply woven into people’s lives.

On the other hand, proponents of the technology argue that it could serve legitimate purposes, particularly in areas like digital fashion and virtual simulation. For example, the underlying techniques could help fashion designers visualize how clothing would look on a body without producing physical prototypes. Virtual avatars could also benefit, letting creators experiment with different digital outfits and body shapes. Used responsibly, this technology could support innovation in the fashion and design industries by offering new ways to visualize products and designs.

Despite these potential legitimate uses, the darker side of undress AI removers continues to make headlines. There has been a rise in the creation of non-consensual explicit content, which is often shared with malicious intent. This abuse exposes the lack of adequate legal frameworks to address the unauthorized use of personal images and videos. It also underscores the need for stronger regulation of AI technologies to prevent their misuse and protect individuals from harm. Some countries have already begun drafting laws aimed at combating deepfakes and related technologies, but consistent legislation across the world remains a work in progress.

The technology also raises concerns about exploitation in the adult entertainment industry. Because AI can generate explicit content that imitates real people, it could produce a wave of fake or deceptive material that blurs the line between consent and coercion. This could normalize non-consensual pornography and enable further abuse. As AI technology continues to advance, society must consider how best to regulate its use so that it does not perpetuate harmful practices or violate people’s rights.

There is also the question of accountability. Who should be held responsible when undress AI is used to create harmful or explicit content without consent? Is it the developer of the technology, the person who creates or distributes the images, or the platform that hosts them? Determining liability in these cases is an ongoing legal challenge. As AI becomes more integrated into daily life, legal systems must evolve to address these problems and ensure that perpetrators of abuse are held accountable.

Education also plays a crucial role in mitigating the risks of undress AI removers. By raising awareness of the ethical implications of AI and the potential harms of manipulating digital images, society can better equip people to navigate the digital world responsibly. Initiatives focused on digital literacy and on the importance of consent in online spaces can foster a more informed and respectful approach to technology. As AI continues to evolve, the public, developers, and lawmakers need to work together to keep ethical considerations at the forefront of innovation.

In conclusion, while undress AI removers offer a striking glimpse into the capabilities of AI, they also carry serious ethical, legal, and social concerns. The line between creative and exploitative uses of this technology is thin, and responsible development and regulation will be essential to prevent its misuse. As AI continues to evolve, it is up to society to establish clear standards and safeguards so that technological progress does not come at the expense of human dignity, safety, and privacy.